Evolution of Operation, Administration, and Maintenance (OA&M)

Intelligent Network (IN)

Integrated Services Digital Network (ISDN)

The need for data communications services grew throughout the 1970s. These services were provided (mostly to companies rather than to individuals) by X.25-based packet-switched data networks (PSDNs). By the early 1980s it was clear to the industry that there was both a market for and the technological feasibility of integrating data communications and voice in a single digital pipe and opening such pipes to businesses (as the means of PBX access) and households. The envisioned applications included video telephony, online directories, delivering a customer’s data to the answering agent’s computer screen at the same time as the customer’s call, telemetry (that is, monitoring devices such as plant controls or smoke alarms and automatically reporting the associated events via telephone calls), and a number of purely voice services. In addition, since the access was to be digital, the voice channels could be used for data connections at a much higher rate than had ever been possible with analog lines and modems.

The ISDN telephone (often called the ISDN terminal) is effectively a computer that runs a specialized application. The ISDN telephone always has a display; in some cases it even looks like a computer terminal, with a screen and keyboard in addition to the receiver and speaker. Several such terminals can be connected to the network terminator (NT1) device, which is placed in the home or office and has a direct connection to the ISDN switch. Non-ISDN terminals (telephones) can also be connected to the ISDN via a terminal adapter. In the enterprise, a digital PBX connects to the NT1, and all other enterprise devices (including ISDN and non-ISDN terminals and enterprise data network gateways) terminate in the PBX.

These arrangements are depicted on the left side of Figure 1. The right side of the figure shows part of the structure of the PSTN, which at this level does not look different from the pre-ISDN PSTN. This similarity is no surprise, since the PSTN had already gone digital before the ISDN was introduced. In addition, bringing the ISDN to either the residential or the enterprise market did not require much rewiring, because the original twisted copper pair could be used in about 70 percent of subscriber lines (Werbach, 1997). What did change is that the codecs moved to the very edge of the end-to-end architecture, into the ISDN terminals, and the local offices had to change somewhat to support the ISDN access signaling standardized by ITU-T. As for signaling inside the network, common channel signaling predated the ISDN, and its Signaling System No. 7 (SS No. 7) version could easily perform all the functions needed for intra-ISDN network signaling.

As for the digital pipe between the network and the user, it consists of channels of different capacities. Some of these channels carry voice or data; others (actually, there is only one in this category) are used for out-of-band signaling. (With the ISDN there is no in-band signaling at all, not even between the user and the network.) The following channels have been standardized for user access:

- A. 4-kHz analog telephone channel.
- B. 64-kbps digital channel (for voice or data).
- C. 8- or 16-kbps digital channel (for data, to be used in combination with channel A).
- D. 16- or 64-kbps digital channel (for out-of-band signaling).
- H. 384-, 1536-, or 1920-kbps digital channel (which can be used for anything, except that it is not part of any standard combination of channels).

The major regional agreements support two combinations:

- Basic rate interface (BRI). Two B channels and one 16-kbps D channel, usually written as 2B+D.
- Primary rate interface (PRI). Twenty-three B channels and one 64-kbps D channel, accordingly written as 23B+D; this is the primary rate in the United States and Japan. In Europe, the primary rate interface is 30B+D.
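The channel arithmetic behind these combinations can be checked with a few lines of Python. This is a minimal sketch; the function name `interface_rate_kbps` is hypothetical, and the rates count channel payload only. The physical interfaces add framing on top: a 23B+D PRI rides on a 1.544-Mbps T1 and a 30B+D PRI on a 2.048-Mbps E1.

```python
B_KBPS = 64          # B channel: voice or data
D_BRI_KBPS = 16      # D channel on the basic rate interface
D_PRI_KBPS = 64      # D channel on the primary rate interface


def interface_rate_kbps(n_b: int, d_kbps: int) -> int:
    """Aggregate payload rate of an nB+D combination, excluding line framing."""
    return n_b * B_KBPS + d_kbps


bri = interface_rate_kbps(2, D_BRI_KBPS)       # 2B+D
pri_us = interface_rate_kbps(23, D_PRI_KBPS)   # 23B+D (United States, Japan)
pri_eu = interface_rate_kbps(30, D_PRI_KBPS)   # 30B+D (Europe)
bri_bearer = 2 * B_KBPS                        # both B channels bonded for data

print(bri, pri_us, pri_eu, bri_bearer)  # 144 1536 1984 128
```

Adding the T1's 8 kbps of framing to 1536 kbps gives 1544 kbps, and adding the E1's 64-kbps framing slot to 1984 kbps gives 2048 kbps.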

The ISDN has been deployed mostly for enterprise use. The residential market never really picked up, although there has been a turnaround driven by the demand for fast Internet access and by ISDN connections becoming less expensive. (The 2B+D combination amounts to 144 kbps in total; for Internet access, the two 64-kbps B channels are typically bonded into a single 128-kbps data pipe.)

Even before the ISDN standardization was finished, the ISDN was renamed narrowband ISDN (N-ISDN), and work began on broadband ISDN (B-ISDN). B-ISDN will offer an end-to-end data rate of 155 Mbps, and it is based on the asynchronous transfer mode (ATM) technology. B-ISDN is to support services like video on demand—predicted to be a killer application; however, full deployment of B-ISDN means complete rewiring of houses and considerable change in the PSTN infrastructure.

Although the ISDN has recently enjoyed considerable growth owing to Internet access demand, its introduction has been slow. Until recently, the United States trailed Europe and Japan in ISDN deployment, particularly for consumers. This lag can partly be explained by the notoriously complex system of telephone tariffs, which seemed to favor business use in the United States. Another explanation often put forward by industry analysts is leapfrogging: by the time Europe and Japan built the infrastructure for universal residential telephone service, ISDN technology was available, whereas in the United States almost every household already had at least one telephone line long before the ISDN concept (not to mention ISDN equipment) existed.

Evolution of Signaling

Evolution of Switching

As noted, the first switch was a switching matrix (board) operated by a human. The 1890s saw the introduction of the first automatic step-by-step systems, which responded to rotary-dial trains of 1 to 10 pulses (that is, digits 1 through 9, and 0, which sends 10 pulses). Cross-bar switches, which could set up a connection within a second, appeared in the late 1930s. Step-by-step and cross-bar switches are examples of space-division switches; later, switching technology evolved to time division. A large step in switching development was made in the late 1960s as a consequence of the computer revolution, when computers began to be used for address translation and line selection. By 1980, stored program control, a real-time application running on a general-purpose computer coupled with a switch, had become the norm.
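The digit-to-pulse rule that step-by-step switches relied on can be written down directly. This is a small sketch assuming the common convention in which digit 0 sends ten pulses (some national networks used different mappings); the function names are hypothetical.

```python
DIGITS = "0123456789"


def pulses_for_digit(digit: str) -> int:
    """Number of loop-interruption pulses a rotary dial sends for one digit."""
    if len(digit) != 1 or digit not in DIGITS:
        raise ValueError(f"not a dial digit: {digit!r}")
    return 10 if digit == "0" else int(digit)


def digit_for_pulses(count: int) -> str:
    """What a step-by-step switch infers from counting one pulse train."""
    if not 1 <= count <= 10:
        raise ValueError(f"invalid pulse count: {count}")
    return "0" if count == 10 else str(count)


trains = [pulses_for_digit(d) for d in "2030"]
print(trains)                                         # [2, 10, 3, 10]
print("".join(digit_for_pulses(n) for n in trains))   # 2030
```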

At about the same time, a revolution in switching took place. The availability of digital transmission made it possible to transmit voice in digital format, and as a consequence the switches went digital. For a detailed treatment of the subject we recommend Bellamy (2000), but we discuss it briefly here because it is at the heart of the matter as far as IP telephony is concerned. In a nutshell, digital switching processes voice end to end in these four steps:

- A device scans all active incoming trunks in round-robin fashion and samples each analog signal 8000 times per second. Each sample is passed to the coder part of a coder/decoder device called a pulse-code modulation (PCM) codec, which outputs an 8-bit string encoding the amplitude of the electrical signal at the moment of sampling.
- The output strings are assembled into a frame whose length is 8 bits times the number of active input lines. This frame is then passed to the time slot interchanger, which builds the output frame by reordering the bytes of the input frame according to the connection table. For example, if input trunk number 3 is connected to output trunk number 5, then the contents of the 3rd byte of the input frame are placed into the 5th byte of the output frame. (The number of lines a time slot interchanger can support is limited solely by the speed at which it operates, so the state of the art in computer architecture and microelectronics is constantly applied to building time slot interchangers. Beyond that limit, the devices are cascaded into multistage units.)
- On outgoing digital trunk groups, the 8-bit slots are multiplexed into a transmission carrier according to the carrier’s standard. (We will address transmission carriers in a moment.) Conversely, a digital switch accepts incoming frames from a transmission carrier and switches them as described in the previous step.
- At the destination switch, the decoder part of the codec translates the 8-bit strings arriving on the input trunk back into electrical signals.
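The steps above can be sketched end to end in Python. This is an illustration under stated assumptions, not a model of any real switch: the coder uses the continuous μ-law companding curve (μ = 255) followed by a uniform 8-bit quantizer, which approximates but is not bit-compatible with the segmented G.711 encoding; amplitudes are normalized to [-1, 1]; slots are numbered from 0 (so the text’s 3rd and 5th bytes are slots 2 and 4); idle output slots are simply filled with 0; the carrier step is left out; and `encode`, `decode`, and `time_slot_interchange` are hypothetical names.

```python
import math

MU = 255.0                 # mu-law companding parameter (North American PCM)
SAMPLES_PER_SECOND = 8000  # sampling rate per trunk


def encode(x: float) -> int:
    """Coder: compress an amplitude in [-1, 1] with the mu-law curve, then quantize to 0..255."""
    x = max(-1.0, min(1.0, x))
    y = math.copysign(math.log1p(MU * abs(x)) / math.log1p(MU), x)
    return round((y + 1.0) / 2.0 * 255)


def decode(code: int) -> float:
    """Decoder: undo the quantization, then expand with the inverse mu-law curve."""
    y = code / 255 * 2.0 - 1.0
    return math.copysign(math.expm1(abs(y) * math.log1p(MU)) / MU, y)


def time_slot_interchange(frame: bytes, connections: dict[int, int], n_out: int) -> bytes:
    """Copy the byte in input slot i into output slot connections[i]; unused slots stay 0."""
    out = bytearray(n_out)
    for in_slot, out_slot in connections.items():
        out[out_slot] = frame[in_slot]
    return bytes(out)


# Step 1: one sampling instant on six active incoming trunks (made-up amplitudes).
samples = [0.0, 0.1, -0.5, 0.9, -0.02, 0.3]
in_frame = bytes(encode(s) for s in samples)   # one 8-bit code per active line

# Step 2: trunk 3 <-> trunk 5 (slots 2 and 4), trunk 1 -> trunk 2 (slot 0 -> slot 1).
connections = {2: 4, 4: 2, 0: 1}
out_frame = time_slot_interchange(in_frame, connections, len(samples))

# Step 4 at the destination: decode the byte now sitting in slot 4.
print(round(decode(out_frame[4]), 2))  # -0.5
```

The small mismatch between a decoded value and the original amplitude is the quantization error; companding keeps that error roughly proportional to the signal level, which is why quiet speech survives 8-bit coding.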

Note that we assumed that digital switches were toll offices (we called both incoming and outgoing circuits trunks). Indeed, initially only the toll switches on the top of the hierarchy went digital, but then digital telephony moved quickly down the hierarchy, and in the 1980s it migrated to the central offices and even PBXs. Furthermore, it has been moving to the local loop by means of the ISDN and digital subscriber line (DSL) technologies addressed further in this part.

The availability of digital transmission and switching immediately resulted in much higher voice quality, especially when the parties to a call are far apart. Over long distances, line losses require multiple amplifiers along the way, and each one amplifies the accumulated noise along with the signal, so their cumulative effect is significant distortion of an analog signal. Digital signals, by contrast, are fairly easy to restore: a 0 and a 1 are each represented by a whole range of analog values, so a relatively small distortion does not change the bit, and a regenerator can re-emit a clean signal. Small distortions therefore have no immediate, and hence no cumulative, effect.
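A toy simulation makes the contrast concrete. It is a sketch under simplifying assumptions, not a channel model: each stage adds independent uniform noise of at most ±0.1 to a signal on a 0-to-1 scale, an analog repeater passes the noisy value on unchanged (unity gain), and a digital regenerator re-decides each bit with a threshold at 0.5. The function names are hypothetical.

```python
import random


def analog_chain(signal: list[float], stages: int, noise: float, rng: random.Random) -> list[float]:
    """Each amplifier stage passes on the signal together with all noise added so far."""
    out = list(signal)
    for _ in range(stages):
        out = [v + rng.uniform(-noise, noise) for v in out]
    return out


def digital_chain(bits: list[int], stages: int, noise: float, rng: random.Random) -> list[int]:
    """Each regenerator thresholds the noisy level and re-emits a clean 0 or 1."""
    levels = [float(b) for b in bits]
    for _ in range(stages):
        noisy = [v + rng.uniform(-noise, noise) for v in levels]
        levels = [1.0 if v >= 0.5 else 0.0 for v in noisy]
    return [int(v) for v in levels]


rng = random.Random(7)
bits = [1, 0, 1, 1, 0, 0, 1, 0]
analog_out = analog_chain([float(b) for b in bits], stages=50, noise=0.1, rng=rng)
digital_out = digital_chain(bits, stages=50, noise=0.1, rng=rng)

analog_err = max(abs(a - b) for a, b in zip(analog_out, bits))
print(digital_out == bits)   # True: noise below the 0.5 decision margin never flips a bit
print(round(analog_err, 3))  # accumulated analog error after 50 stages
```

Because the per-stage noise never exceeds the decision margin, the digital chain is exact after any number of stages, while the analog error behaves like a random walk that grows with the number of stages.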

We conclude this section by listing the transmission carriers and formats. The T1 carrier multiplexes 24 voice channels, each represented by 8-bit samples, into a 193-bit frame (24 × 8 = 192 bits plus 1 extra bit, which is used as a framing code by alternating between 0 and 1). Because each channel is sampled 8000 times per second, a T1 frame is issued every 125 µs, so the T1 data rate in the United States is 193 × 8000 = 1.544 Mbps. (Incidentally, another carrier, called E1, which is used predominantly outside the United States, carries thirty-two 8-bit time slots in its frame, for a rate of 32 × 8 × 8000 = 2.048 Mbps.)
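The T1 frame layout and rate can be reproduced in a few lines. This is a minimal sketch: `t1_frame` is a hypothetical helper, sample bits are emitted most significant first, and the caller supplies the framing bit (alternated here as the text describes).

```python
CHANNELS = 24
FRAMES_PER_SECOND = 8000     # one 8-bit sample per channel in each frame


def t1_frame(samples: bytes, framing_bit: int) -> list[int]:
    """Return the 193 bits of one T1 frame: 1 framing bit, then 24 x 8 sample bits (MSB first)."""
    if len(samples) != CHANNELS:
        raise ValueError(f"a T1 frame carries exactly {CHANNELS} samples")
    bits = [framing_bit & 1]
    for sample in samples:
        bits.extend((sample >> shift) & 1 for shift in range(7, -1, -1))
    return bits


frames = [t1_frame(bytes(range(CHANNELS)), framing_bit=n % 2) for n in range(2)]
print(len(frames[0]))                       # 193
print(len(frames[0]) * FRAMES_PER_SECOND)   # 1544000 bits per second
print(1_000_000 / FRAMES_PER_SECOND)        # 125.0 microseconds between frames
print(32 * 8 * FRAMES_PER_SECOND)           # 2048000: E1 with 32 time slots
```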

T1 carriers can be further multiplexed bit by bit into higher-order carriers, with extra bits added each time for synchronization:

- Four T1 frames are multiplexed into a T2 frame (rate: 6.312 Mbps).
- Seven T2 frames are multiplexed into a T3 frame (rate: 44.736 Mbps).
- Six T3 frames are multiplexed into a T4 frame (rate: 274.176 Mbps).

The ever-increasing capacity of the resulting pipes is depicted in Figure 1.
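Each rate in the hierarchy can be checked against its tributaries to see how much synchronization overhead every multiplexing stage adds. This short sketch uses the rates given in the text, expressed in kbps to keep the arithmetic exact; `LEVELS` and `hierarchy_table` are illustrative names. Note that the T3 rate only works out with seven T2 tributaries (7 × 6312 = 44,184 kbps of payload), which also yields the familiar 672 voice channels per T3.

```python
# (carrier, line rate in kbps, number of tributaries of the previous level)
LEVELS = [
    ("DS0", 64, 1),        # a single 64-kbps voice channel
    ("T1", 1_544, 24),
    ("T2", 6_312, 4),
    ("T3", 44_736, 7),
    ("T4", 274_176, 6),
]


def hierarchy_table(levels: list[tuple[str, int, int]]) -> list[tuple[str, int, int, int, int]]:
    """Return (carrier, rate, payload, overhead, voice channels) for each level above DS0."""
    rows = []
    _, prev_rate, channels = levels[0]
    for name, rate, n in levels[1:]:
        payload = n * prev_rate
        channels *= n
        rows.append((name, rate, payload, rate - payload, channels))
        prev_rate = rate
    return rows


for name, rate, payload, overhead, channels in hierarchy_table(LEVELS):
    print(f"{name}: {rate} kbps = {payload} payload + {overhead} overhead, {channels} voice channels")
```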

Structure of the PSTN

At the very beginning of the telephony age, telephones were sold in pairs; for a call to be made, the two telephones involved had to be connected directly. So, in addition to the grounding wire, if there were 20 telephones you wanted to call (or that might call you), each would be connected to your telephone by a separate wire. At a certain point it became clear that a better long-term solution was needed, and such a solution came in the form of the first Bell Company switching office in New Haven, Connecticut. The office had a switching board, operated by human operators, to which the telephones were connected. An operator’s job was to answer the calling party, ask for the name of the called party, and then establish the call by connecting the sockets of the calling and called parties with a wire. After the call was completed, the operator disconnected the circuit by pulling the wire from the sockets. Note that no telephone numbers were involved (or needed). Telephone numbers became a necessity later, when the first automatic switch was built. The automaton was purely mechanical and could find the necessary sockets only by counting; thus, the telephones (and their respective sockets in the switch) were identified by telephone numbers. Later, switches had to be interconnected with other switches, and the first telephone network, the Bell System in the United States, came to life. Other telephone networks were built in much the same way.

Many things have happened since the first network appeared, and we are going to address them, but the structure of the PSTN in terms of its main components has remained unchanged as far as the establishment of the end-to-end voice path is concerned. The components are:

- Station equipment [or customer premises equipment (CPE)]. Located on the customer’s premises, its primary function is to transmit and receive signals between the customer and the network. This equipment ranges from residential telephones to sophisticated enterprise private branch exchange systems (PBXs).
- Transmission facilities. These provide the communications paths, which consist of transmission media (atmosphere, paired cable, coaxial cable, light guide cable, and so on) and various types of equipment to amplify or regenerate signals.
- Switching systems. These interconnect the transmission facilities at various key locations and route traffic through the network. (They have been called switching offices since the time of the first Connecticut office.)
- Operations, administration, and maintenance (OA&M) systems. These provide administration, maintenance, and network management functions to the network.

Until fairly recently, switching boards remained in use in relatively small organizations (such as hotels, hospitals, or companies of several dozen employees), but they were finally replaced by customer premises switches called private branch exchanges (PBXs). The PBX is thus the most sophisticated example of station equipment. At the other end of the spectrum is the ordinary single-line telephone set. In addition to transmitting and receiving user information (such as conversation), station equipment is responsible for addressing (that is, specifying to the network the destination of the call) as well as for other forms of signaling [idle or busy status, alerting (that is, ringing), and so on].

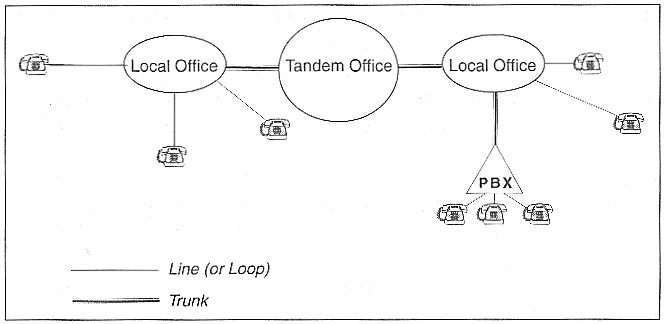

As Figure 1 demonstrates, station equipment is connected to switches. Telephones are connected to local switches (also interchangeably called local offices, central offices, end offices, or Class 5 switches) by means of local loop circuits or channels carried over local loop transmission facilities. The circuits that interconnect switches are called trunks, and they are carried over interoffice transmission facilities. The local offices are, in turn, interconnected to toll offices (called tandem offices in this case). Finally, we should note that in all this terminology the word office is interchangeable with exchange and, of course, switch; it is very difficult to say which word is more widely used.

The trunks are grouped; it is often convenient to refer to trunk groups, which are assigned specific identifiers, rather than individual trunks. Grouping is especially convenient for the purposes of network management or assignment to transmission facilities. (A trunk is a logical abstraction rather than a physical medium; a trunk leaving a switch can be mapped to a fiber-optic cable on the first part of its way to the next switch, microwave for the second part, and four copper wires for the third part.)
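The relationship between a trunk group, its trunks, and the facilities they ride on maps naturally onto a small data model. This is a hypothetical sketch: the class names, fields, identifiers such as `TG-0017`, and the first-idle hunting rule are illustrative assumptions, not taken from any real switch. It shows a trunk as a logical object whose path crosses several media, while management operates on the group.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class FacilitySegment:
    medium: str          # e.g., "fiber", "microwave", "copper (four-wire)"
    from_site: str
    to_site: str


@dataclass
class Trunk:
    number: int
    path: list[FacilitySegment]   # physical facilities this logical trunk is mapped to

    def media(self) -> list[str]:
        return [segment.medium for segment in self.path]


@dataclass
class TrunkGroup:
    group_id: str                 # managed and assigned as a unit
    from_switch: str
    to_switch: str
    trunks: list[Trunk] = field(default_factory=list)

    def hunt_idle(self, busy: set[int]) -> Optional[Trunk]:
        """Select the first trunk in the group that is not busy (hypothetical hunting rule)."""
        for trunk in self.trunks:
            if trunk.number not in busy:
                return trunk
        return None


route = [
    FacilitySegment("fiber", "SW-A", "hub-1"),
    FacilitySegment("microwave", "hub-1", "hub-2"),
    FacilitySegment("copper (four-wire)", "hub-2", "SW-B"),
]
group = TrunkGroup("TG-0017", "SW-A", "SW-B", [Trunk(n, route) for n in range(1, 5)])
seized = group.hunt_idle(busy={1, 2})
print(seized.number, seized.media())   # 3 ['fiber', 'microwave', 'copper (four-wire)']
```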

In the original Bell System, there were five levels in the switching hierarchy; this number has dropped to three owing to the development of nonhierarchical routing (NHR) in the long-distance network. The local carriers did not adopt NHR, however, so they retained the two levels of the switching hierarchy: local and tandem.

Local switches in the United States are grouped into local access and transport areas (LATAs). You can find a current map of LATAs at www.611.net/NETWORKTELECOM/lata_map/index.htm. A LATA may have many offices (on the order of 100), including tandem offices. Service within a LATA is typically provided by local exchange carriers (LECs). Some LECs have existed for a considerable time (such as the original Bell Operating Companies, which were separated from AT&T in 1984 as a result of the breakup of the Bell System) and so are called incumbent LECs (ILECs); others appeared fairly recently and are called competitive LECs (CLECs). Inter-LATA traffic is carried by inter-exchange carriers (IXCs). The IXCs are connected to central or tandem offices by means of points of presence (POPs).
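The division of labor between LECs and IXCs amounts to a simple decision based on the LATAs of the two end offices. The following is a deliberately simplified, hypothetical sketch: it assumes every intra-LATA call between different offices goes through the LATA’s tandem office (real LECs also use direct interoffice trunks), it assumes an inter-LATA call leaves and enters each LATA through the IXC’s point of presence, and the office, LATA, and carrier names are made up.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EndOffice:
    name: str
    lata: str


def plan_call(a: EndOffice, b: EndOffice, ixc: str) -> tuple[str, list[str]]:
    """Return (carriers involved, sequence of offices/POPs) for a call from office a to b."""
    if a == b:
        return "LEC", [a.name]                                 # both parties on one end office
    if a.lata == b.lata:
        return "LEC", [a.name, f"tandem ({a.lata})", b.name]   # intra-LATA: local and tandem levels
    return "LEC + IXC", [                                      # inter-LATA: handed to the IXC
        a.name,
        f"{ixc} POP ({a.lata})",
        f"{ixc} POP ({b.lata})",
        b.name,
    ]


x = EndOffice("EO-1", "LATA-A")
y = EndOffice("EO-2", "LATA-A")
z = EndOffice("EO-9", "LATA-B")
print(plan_call(x, y, "IXC-1"))     # ('LEC', ['EO-1', 'tandem (LATA-A)', 'EO-2'])
print(plan_call(x, z, "IXC-1")[0])  # LEC + IXC
```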

Figure 1 depicts the interconnection of an IXC with one particular LATA. The IXC switches form the IXC network, in which routing is typically nonhierarchical. Today IXCs provide local service, too; moreover, since their early days IXCs have had direct trunks to the PBXs of large companies, to which they provided services such as virtual private networks (VPNs).[3] We should mention that IXCs in the United States can (and do) interconnect with overseas long-distance service providers by means of complex gateways that perform call signaling translations, but IXCs in the United States are typically not directly interconnected with each other.